01

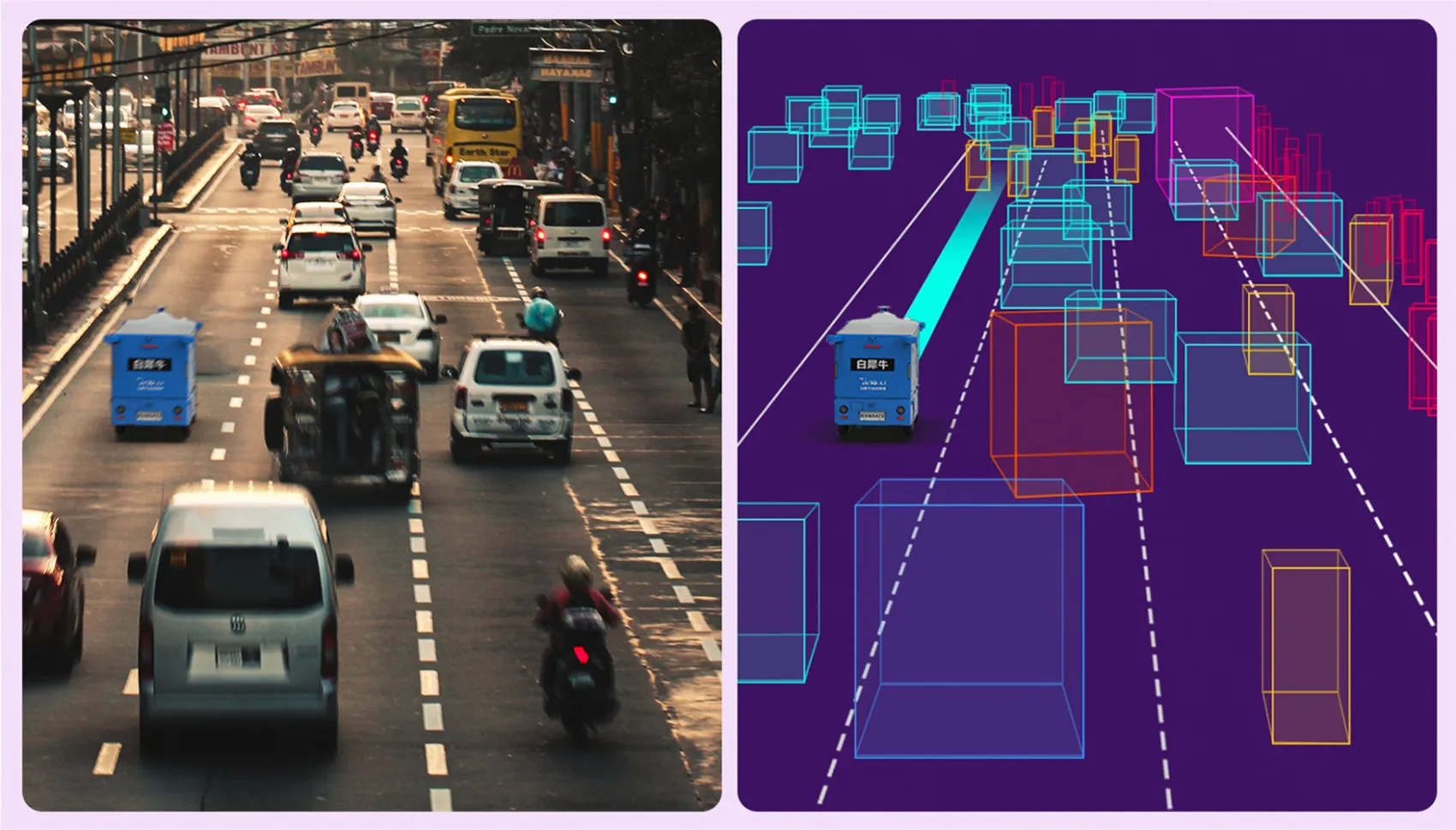

Perception One Model

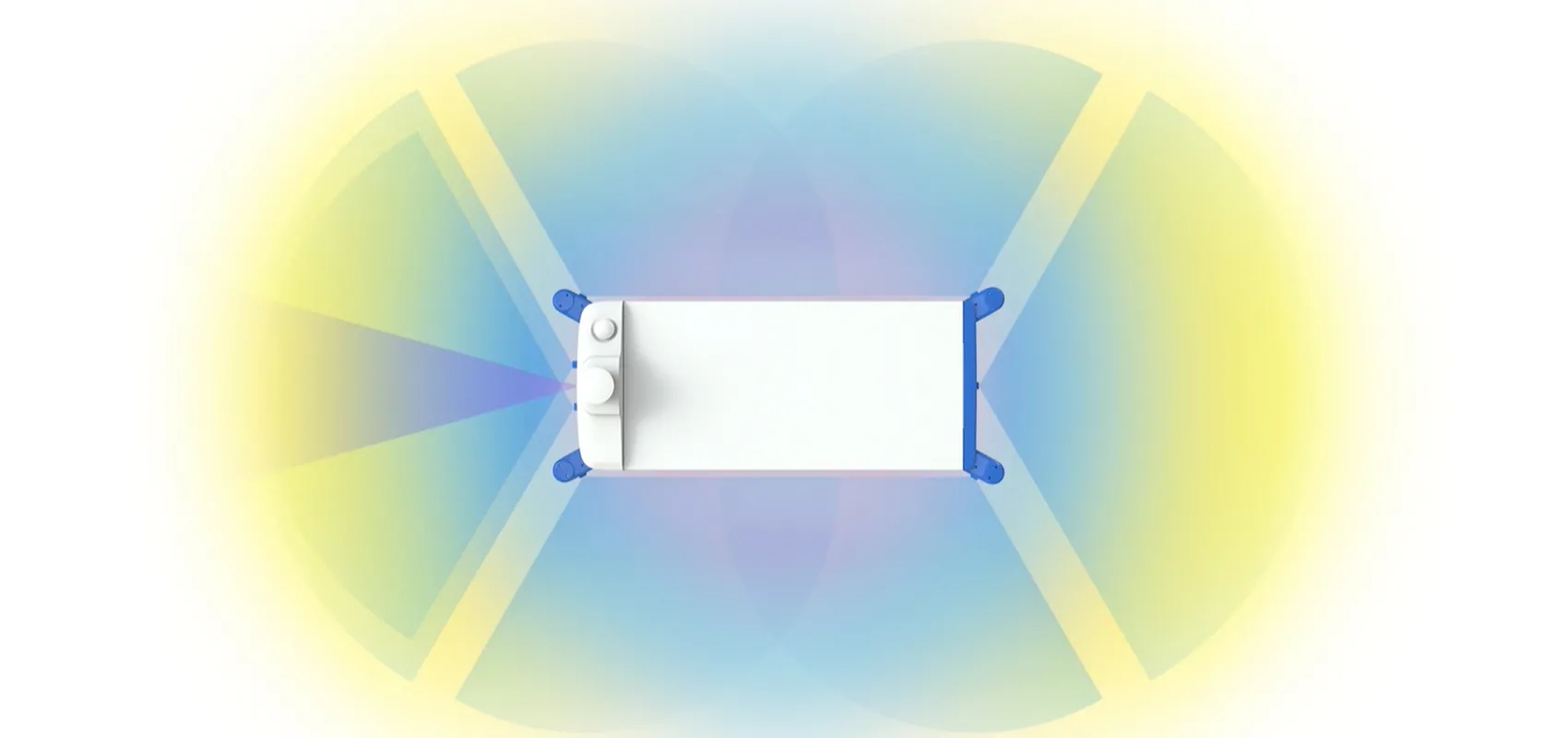

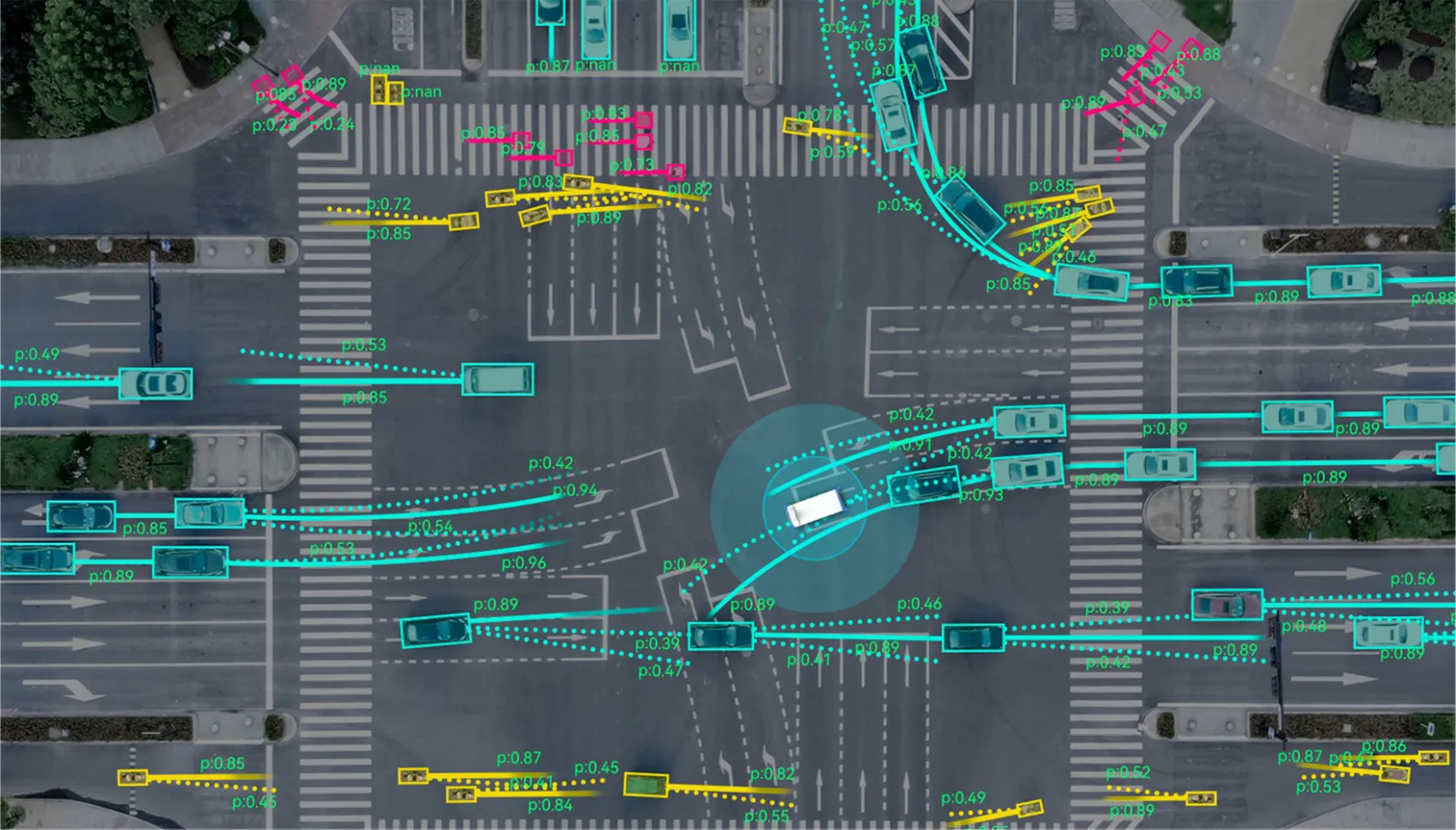

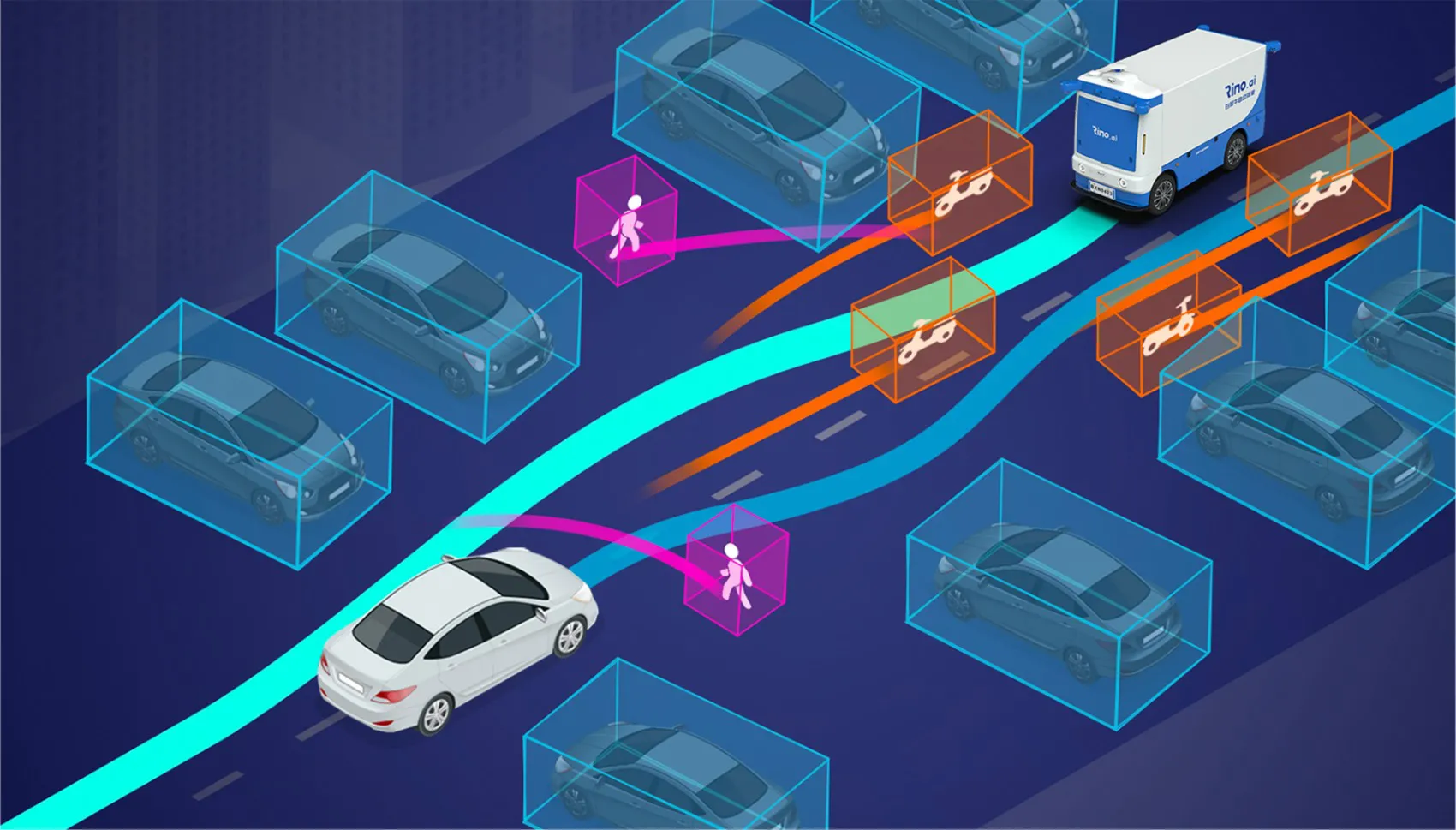

Built on the BEV (bird's-eye view) + Transformer design paradigm, this unified model integrates functions such as obstacle detection, general occupancy (Occ) prediction, lane element detection, map topology reasoning, object tracking, and speed estimation to enable efficient, data-driven perception.

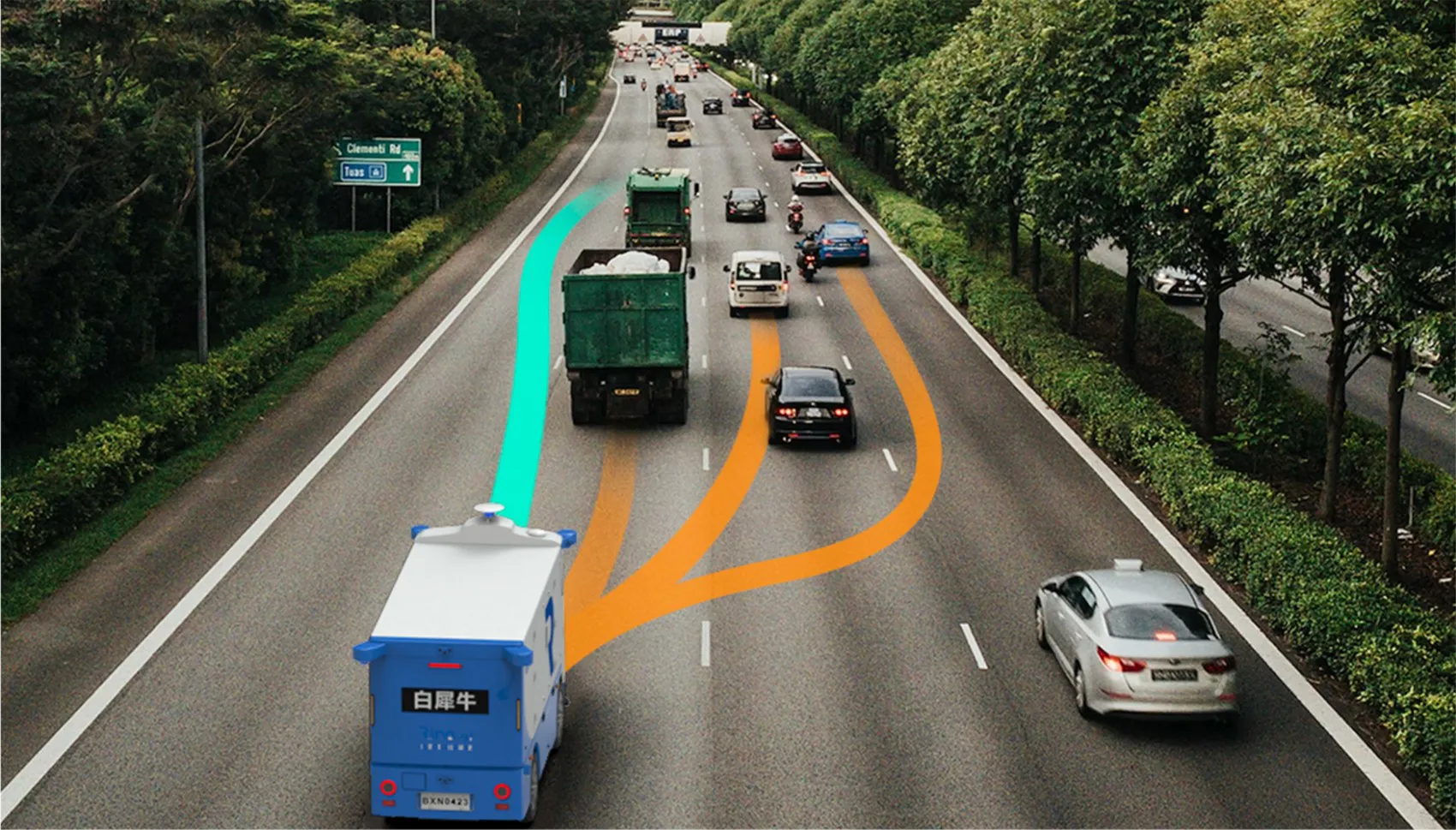

Vehicles are equipped with cameras and LiDAR, providing long-range perception in high-speed scenarios and wide-angle views in complex environments. Multi-sensor fusion allows perception through rain, fog, and dust, maintaining a “clear and complete” 360° view of the surroundings and enhancing driving safety.

Recognizes various types of traffic lights autonomously, enabling smooth navigation through intersections without relying on HD maps.

02

Model and Data Closed-Loop System

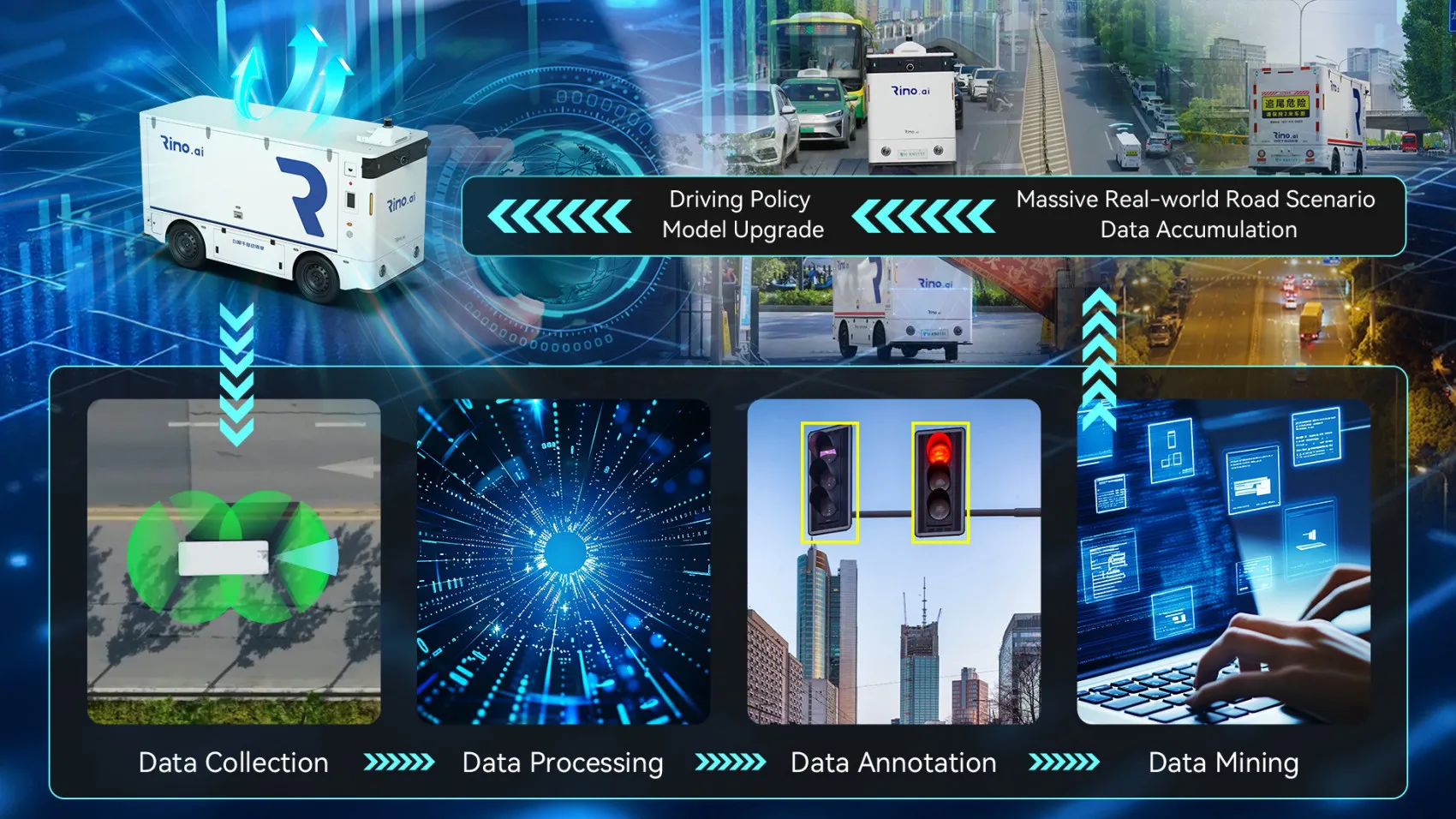

Leverages an industrial-grade deep learning platform to enable efficient, automated data and model production.

Enables automated, closed-loop management of the full data lifecycle, from data collection, mining, processing, and annotation to data asset management. By accumulating multi-dimensional scenario data and mining its value, the platform continuously fuels the evolution of AI capabilities with massive volumes of real-world data.

Built on a high-performance architecture, the platform enables large-scale distributed parallel processing to accelerate model iteration. It features deep optimization of core operators such as Sparse Transformer to reduce computational costs, and supports automated model adaptation, quantization, and compression.
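As an illustration of what model quantization involves, the sketch below applies symmetric int8 quantization to a short list of weights. It is a minimal, generic example and not the platform's actual pipeline; the function names `quantize_int8` and `dequantize` are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric int8 quantization: map floats onto integers in [-127, 127].

    Illustrative only; real toolchains typically quantize per channel
    and calibrate on sample data.
    """
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the int8 representation."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
```

Each restored value differs from the original by at most half a quantization step (`scale / 2`), which is the accuracy traded for a smaller, cheaper model.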

Digitally replicates real-world driving scenarios to build highly realistic system models, enabling 24/7 simulation and testing. This ensures efficient and reliable validation of autonomous driving system updates.

Enables full-domain over-the-air updates for autonomous driving systems, high-definition maps, and driving model algorithms, ensuring continuous performance enhancement. Engineered for exceptional stability and consistency to guarantee secure and reliable OTA upgrades.

03

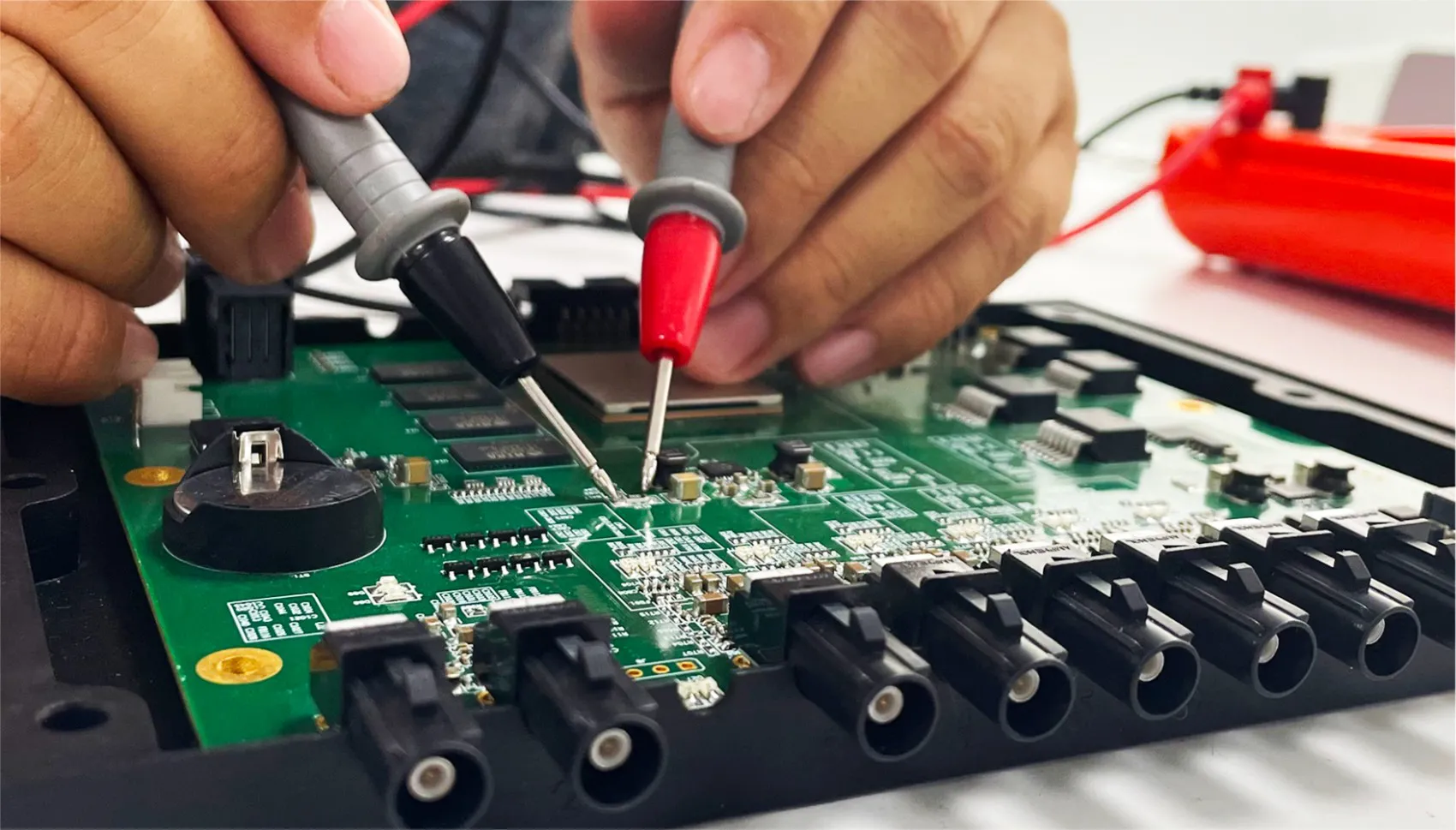

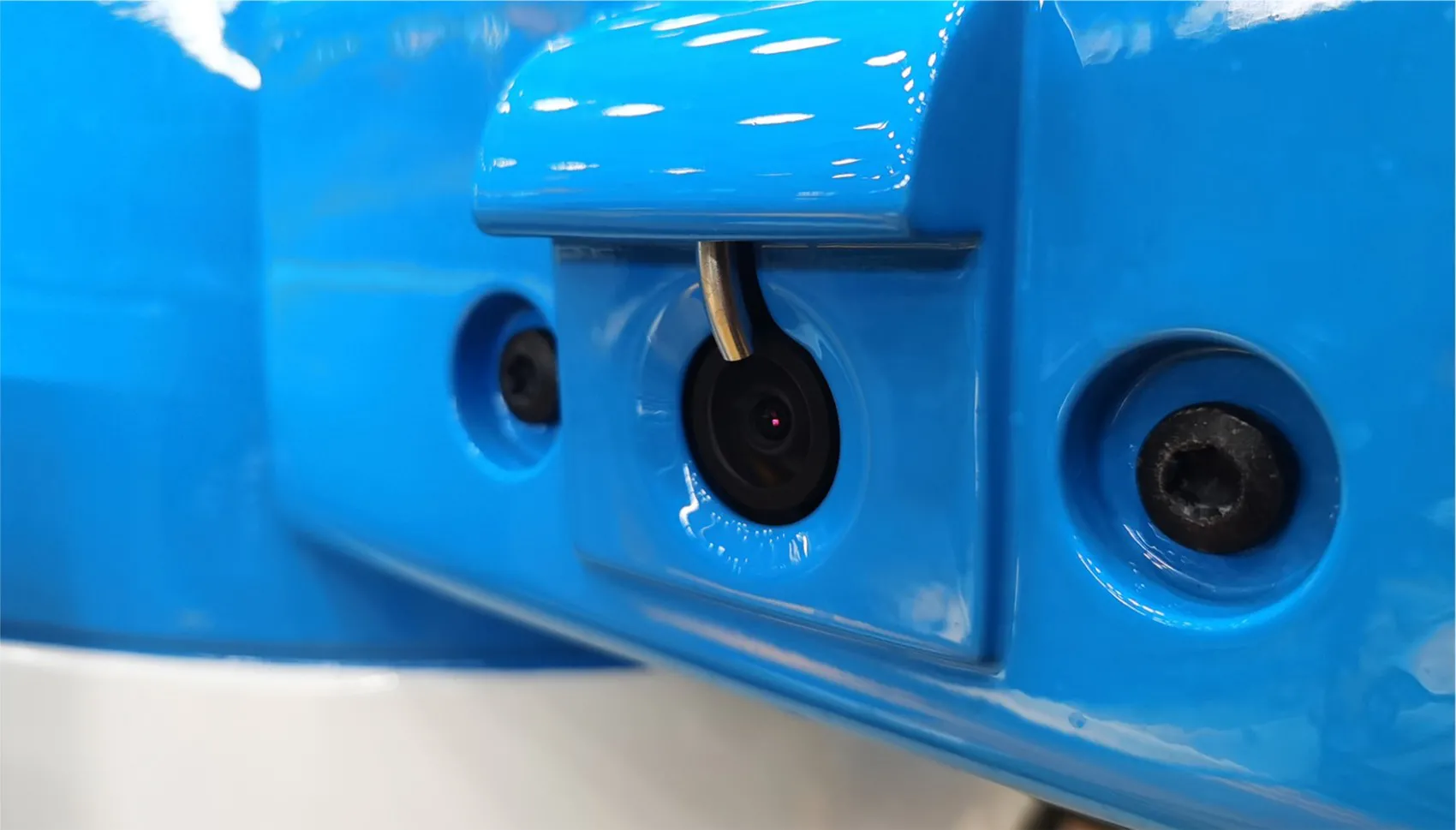

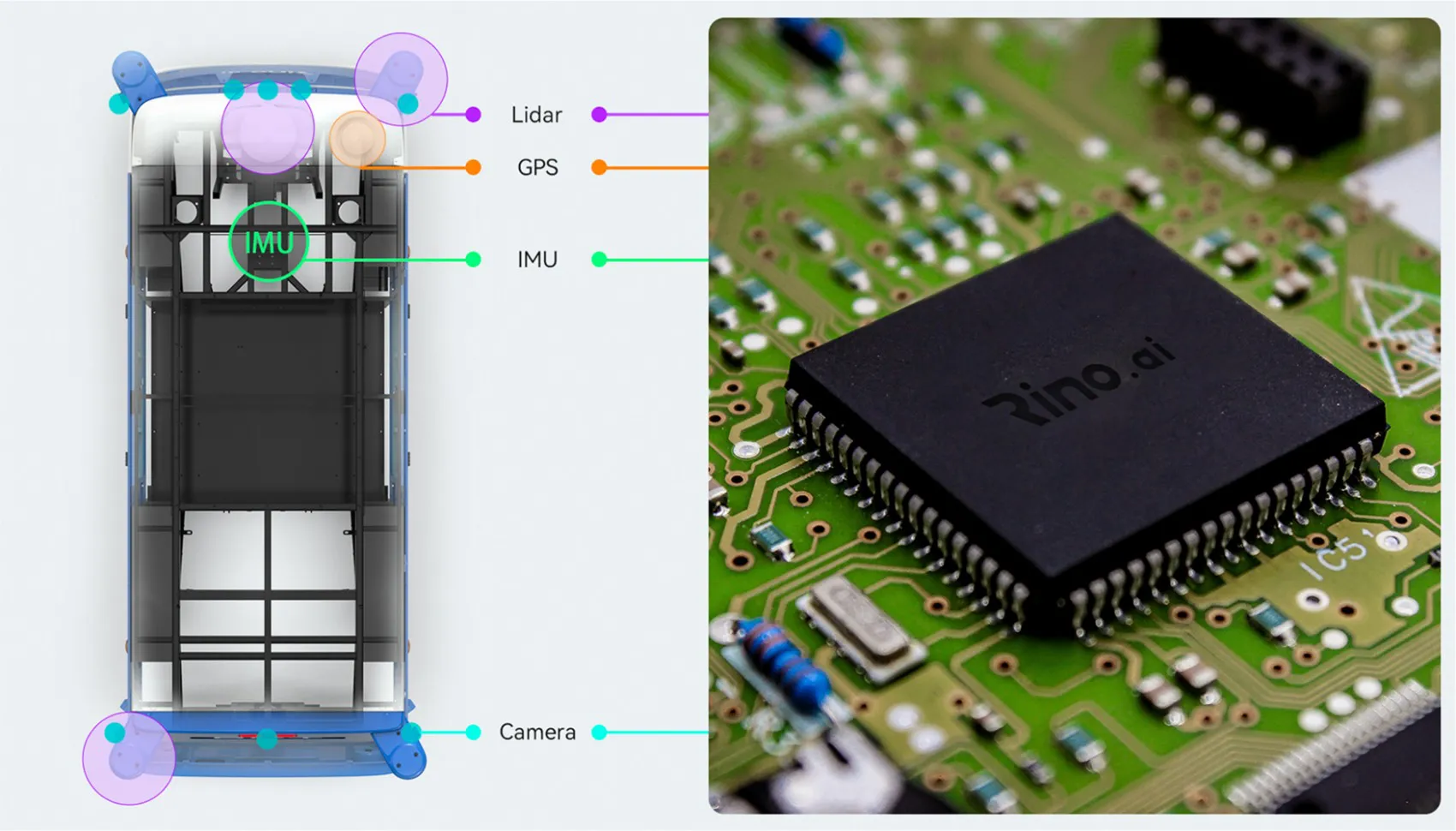

Self-Developed Intelligent Autonomous Driving Hardware

Independently developed hardware includes dedicated computing units, multimodal sensor suites, high-reliability communication modules (4G/5G + GNSS), and drive-by-wire chassis systems. A vehicle-grade electrical and electronic architecture with redundant designs and fault isolation mechanisms ensures safe and stable vehicle operation in extreme environments.

Supports synchronized triggering, data acquisition, and preprocessing for multimodal sensors, improving real-time performance of the computing platform.

In-house high-performance, highly reliable, low-power, and cost-effective computing platform.

Self-developed high-precision localization device that fuses data from IMU, CAN bus, GNSS, LiDAR, and camera sensors.
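To give a sense of what fusing sensor data means, the sketch below combines independent position estimates by inverse-variance weighting, so that more precise sources count for more. This is a deliberately simplified stand-in: a production localization device would more likely run a Kalman filter or a factor graph over full vehicle state. The function name and the sample numbers are assumptions made for illustration.

```python
def fuse_estimates(estimates):
    """Fuse independent (value, variance) estimates of the same quantity.

    Each estimate is weighted by 1 / variance. The fused variance is
    never larger than the smallest input variance.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(
        w * value for w, (value, _) in zip(weights, estimates)
    ) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical 1D position readings in meters: (value, variance).
gnss = (10.0, 4.0)    # noisier source
lidar = (12.0, 1.0)   # more precise source
position, variance = fuse_estimates([gnss, lidar])
```

The fused position lands closer to the more precise reading, and its variance is lower than that of either input alone.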

Integrates electrical devices, LiDARs, and cameras for rapid deployment.

Features an air-knife cleaning system for rain and snow, enhancing all-weather operational capability.

Utilizes voice or light signals to alert pedestrians and vehicles based on the surrounding scenario.

04

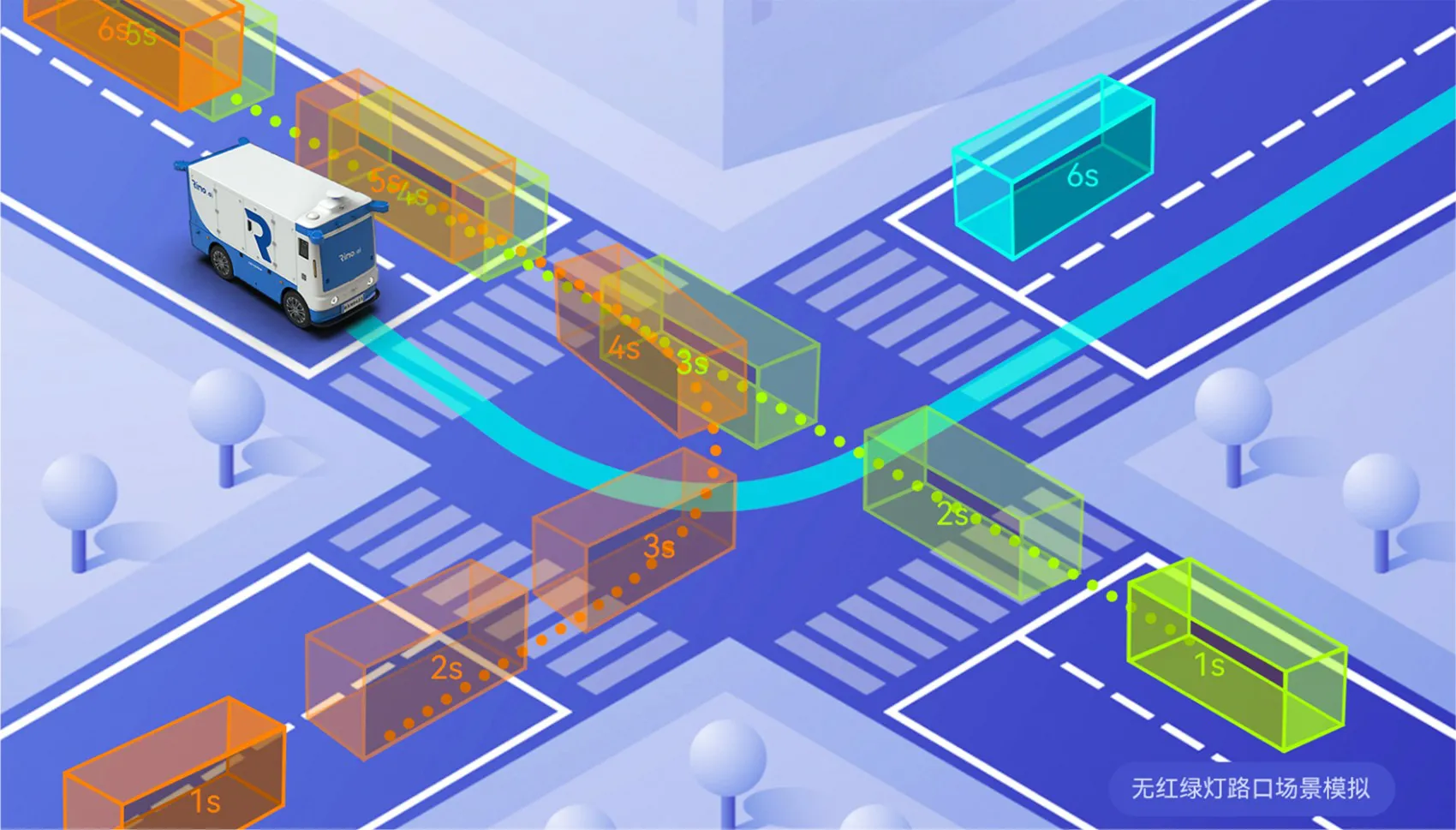

Prediction, Decision-making, and Planning

Built on a Transformer-based architecture, our system leverages Vision Transformer (ViT) models to understand complex scene semantics, such as multi-directional vehicle interactions at intersections, and outputs a risk probability matrix. Pre-trained on over 100 billion trajectory data points, it enables long-horizon trajectory prediction at 0.1-second resolution, supporting dynamic, game-theoretic decision-making. This allows the vehicle to generate safe and efficient driving paths in real time.

Seamlessly unifies spatial and temporal data to generate trajectories that adapt to complex road conditions and mimic natural human driving behavior.

Incorporates probabilistic models to account for data uncertainties from upstream and downstream modules, enhancing the robustness of planning under real-world conditions.

Vehicles learn human driving behavior through interaction with the environment, enabling flexible responses in interactive or adversarial scenarios and significantly improving traffic efficiency.

Utilizes nonlinear feedback models to significantly enhance control precision, especially under complex conditions such as sharp curves and dynamic environments.

05

Cloud-Based Simulation Platform

Rino.ai’s cloud-based platform features a closed-loop simulation system powered by world models to train and evaluate vehicle-side models. It prioritizes reconstruction of static scenes and integrates dynamic elements under physical constraints to rebuild and render complete scenarios, greatly facilitating corner case data collection and model enhancement.

Uses real store and delivery point data to simulate traffic participants and comprehensively test autonomous driving systems. Supports various forms of development and research, including single-vehicle and multi-vehicle intelligence.

High-value scenario data from real-world road testing can be automatically transformed into LogSim-compatible ADS (Autonomous Driving Simulation) formats. After being curated by test engineers or filtered through data mining, these scenarios are seamlessly converted for reuse—greatly streamlining regression testing and accelerating development cycles.

For high-cost, high-risk, or rare edge-case scenarios, WorldSim enables flexible editing and scene design within the simulation environment. Fuzzing technology enables efficient bulk scenario generation, further supporting ADS creation and autonomous driving parameter training.
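Bulk scenario generation by fuzzing can be pictured as taking one base scenario and randomly perturbing selected parameters within allowed ranges. The sketch below shows that idea only; it is not WorldSim's interface, and the function name, parameter names, and ranges are hypothetical.

```python
import random


def fuzz_scenarios(base, ranges, count, seed=0):
    """Generate `count` variants of `base` by resampling fuzzed parameters.

    `ranges` maps a parameter name to a (low, high) interval. Parameters
    not listed in `ranges` are copied unchanged. A fixed seed keeps the
    generated batch reproducible for regression testing.
    """
    rng = random.Random(seed)
    scenarios = []
    for _ in range(count):
        variant = dict(base)
        for name, (low, high) in ranges.items():
            variant[name] = rng.uniform(low, high)
        scenarios.append(variant)
    return scenarios


# Hypothetical base scenario: a pedestrian crossing ahead of the ego vehicle.
base = {"ego_speed_mps": 8.0, "pedestrian_offset_m": 15.0, "weather": "rain"}
ranges = {"ego_speed_mps": (3.0, 12.0), "pedestrian_offset_m": (5.0, 30.0)}
batch = fuzz_scenarios(base, ranges, count=100, seed=42)
```

Seeding the generator means a failing variant can be regenerated exactly, which matters when a fuzzed scenario is promoted into a regression suite.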

Simulates camera, LiDAR, radar, GPS, and IMU sensors at the physical signal level. Enables simulation testing at the physical, raw, and object-level signal layers to meet varying validation needs.

06

Intelligent Dispatch Platform

The Dispatch Platform is an intelligent operations tool designed specifically for autonomous vehicles. Rino.ai’s dispatch platform integrates optimal route planning, vehicle management apps, and high-stability architecture to enable efficient digital fleet operation.

Processes real-time traffic flow, road conditions (e.g., congestion, accidents, construction), task distribution, and vehicle status (location, battery level) to compute globally optimal or near-optimal vehicle-task matching and routes.
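Vehicle-task matching of this kind can be framed as an assignment problem: give every vehicle one task so that total cost is minimal. The sketch below brute-forces a tiny instance to make the idea concrete. It is not Rino.ai's dispatcher. The cost model (Manhattan distance, with a task ruled out when it exceeds the remaining battery range), the `MAX_RANGE_KM` constant, and all names are assumptions, and a real fleet would need a scalable solver such as the Hungarian algorithm.

```python
from itertools import permutations

MAX_RANGE_KM = 20.0  # assumed range on a full battery (illustrative)


def travel_cost(vehicle, task):
    """Manhattan distance in km, or infinity if the battery cannot cover it."""
    (vx, vy), (tx, ty) = vehicle["pos"], task["pos"]
    distance = abs(vx - tx) + abs(vy - ty)
    if distance > vehicle["battery"] * MAX_RANGE_KM:
        return float("inf")
    return distance


def match_vehicles_to_tasks(vehicles, tasks):
    """Try every one-to-one assignment and keep the cheapest feasible one.

    Assumes equally many vehicles and tasks. Brute force is O(n!), so it
    is only suitable for illustration.
    """
    best_total, best_perm = float("inf"), None
    for perm in permutations(range(len(tasks))):
        total = sum(travel_cost(v, tasks[t]) for v, t in zip(vehicles, perm))
        if total < best_total:
            best_total, best_perm = total, perm
    if best_perm is None:  # no feasible assignment exists
        return {}, best_total
    matching = {v["id"]: tasks[t]["id"] for v, t in zip(vehicles, best_perm)}
    return matching, best_total


vehicles = [
    {"id": "v1", "pos": (0, 0), "battery": 0.8},
    {"id": "v2", "pos": (5, 5), "battery": 0.15},  # low battery, short range
    {"id": "v3", "pos": (2, 1), "battery": 0.6},
]
tasks = [
    {"id": "tA", "pos": (1, 1)},
    {"id": "tB", "pos": (6, 5)},
    {"id": "tC", "pos": (2, 3)},
]
assignment, total = match_vehicles_to_tasks(vehicles, tasks)
```

In this example the low-battery vehicle `v2` can only reach the nearby task `tB`, which forces the other two vehicles onto the remaining tasks, for a total cost of 5 km.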

Enables task dispatch and vehicle management, with real-time access to vehicle status, routes, live camera feeds, and task notifications—streamlining operations and maximizing efficiency.

Built on a highly reliable, high-concurrency, and scalable architecture to support stable 24/7 operation of large fleets in complex urban environments, with smooth handling of business growth.

07

Safety Architecture

We’ve built a full-process safety protection system spanning design, operation, and verification. By employing a hardware redundancy architecture, continuous intelligent algorithm iteration, real-time status monitoring, and rigorous compliance verification, we establish a comprehensive multi-layered defense system encompassing both the physical and logical layers. This framework guarantees the safe and reliable operation of the system throughout its entire lifecycle.